June 20, 2023

Artificial intelligence is often described as a "black box" that hides the reasons behind its decisions. Revealing those reasons could teach researchers more about how these algorithms work. Now a new tool offers to help, at least partially. Read more in this week's top story.

Sophie Bushwick, Associate Editor, Technology

Artificial Intelligence

New Tool Reveals How AI Makes Decisions

Large language models such as ChatGPT tend to make things up. A new approach now allows the systems to explain their responses, at least partially.

By Manon Bischoff

Privacy

Secret Messages Can Hide in AI-Generated Media

In steganography, an ordinary message masks the presence of a secret communication. Humans can never do it perfectly, but a new study shows it's possible for machines.

By Stephen Ornes, Quanta Magazine

QUOTE OF THE DAY

"The CEO of OpenAI, Sam Altman, has spent the last month touring world capitals where, at talks to sold-out crowds and in meetings with heads of governments, he has repeatedly spoken of the need for global AI regulation. But behind the scenes, OpenAI has lobbied for significant elements of the most comprehensive AI legislation in the world--the E.U.'s AI Act--to be watered down in ways that would reduce the regulatory burden on the company."

Billy Perrigo, TIME

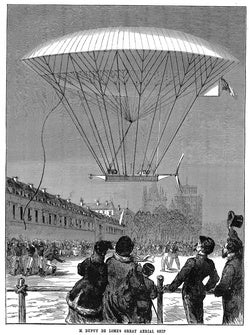

FROM THE ARCHIVE

| | | |

LATEST ISSUES

|

| |

| Questions? Comments?  | |

| Download the Scientific American App |

| |

| |